AI Testing: How to Structure Proof of Concept Without Wasting Budget

Discover how to effectively structure your AI proof of concept to avoid costly failures, ensuring feasibility, value, and a smooth transition from prototype to production.

Many companies find themselves trapped in endless pilots without clear outcomes. By some estimates, 95% of generative AI pilots fail, and in education alone 62% of AI pilots never reach production. The difference between a successful AI implementation and a costly failure often comes down to one factor: how you structure your proof of concept (PoC) and manage its budget.

This guide explains how to structure proofs of concept that answer the right questions—feasibility, cost, and value—while distinguishing exploratory prototypes from production systems and showing how to move from one to the other deliberately.

What Makes AI Testing Different from Traditional Software Testing

AI testing requires a fundamentally different approach than traditional software development. Unlike conventional applications with predictable inputs and outputs, AI systems introduce unique risks including adversarial manipulation, bias, and unpredictable behavior patterns.

A proof of concept in AI is a time-bound, narrow-scope validation exercise designed to answer specific feasibility questions before committing significant resources to full development. The goal isn't to build a perfect system—it's to gather enough evidence to make an informed decision about whether to proceed, pivot, or abandon the initiative.

Traditional software testing focuses primarily on functionality and performance. AI testing, however, must evaluate trustworthiness, bias, data quality, and model reliability. This multidisciplinary approach requires integrating security considerations with broader trustworthiness properties from the very beginning of your PoC.

The key distinction lies in uncertainty management. Traditional software projects operate with relatively known variables, while AI projects must account for data variability, model performance fluctuations, and evolving regulatory requirements. Your PoC structure must reflect these realities.

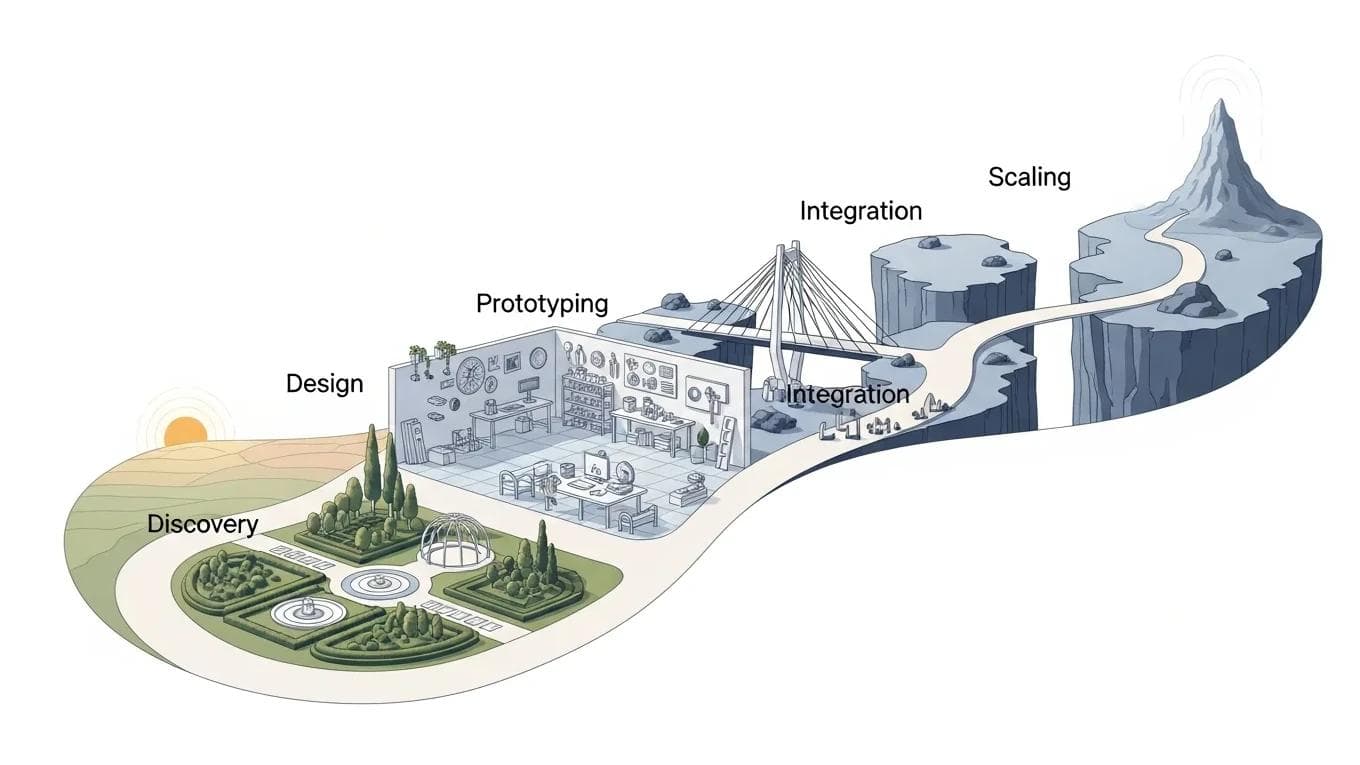

The Two Types of AI Validation: Exploratory vs. Production-Ready

Understanding the difference between exploratory prototypes and production-ready systems is crucial for budget management and timeline planning. These aren't just different phases—they're fundamentally different types of validation with distinct purposes, timelines, and success metrics.

Exploratory Prototypes: Answering "Can We?"

Exploratory prototypes focus on technical feasibility and concept validation. They typically run for 2-4 weeks with minimal infrastructure requirements and use sample datasets. The primary questions they answer include:

- Can the AI model perform the basic task with available data?

- What level of accuracy can we realistically achieve?

- Are there any fundamental technical blockers?

- What data quality issues need addressing?

Exploratory prototypes should consume no more than 10-15% of your total AI project budget. They're designed for rapid iteration and learning, not for demonstrating production capabilities.

Production-Ready PoCs: Answering "Should We?"

Production-ready PoCs operate in environments that closely mirror real-world conditions. They typically require 6-12 weeks, involve actual user interactions, and test scalability, security, and integration capabilities. These PoCs answer business-critical questions:

- Will users actually adopt this solution?

- Can the system handle expected load and usage patterns?

- What are the true operational costs?

- How does this integrate with existing workflows?

Production-ready PoCs should represent 25-35% of your total project budget because they require more robust infrastructure, security considerations, and user testing protocols.

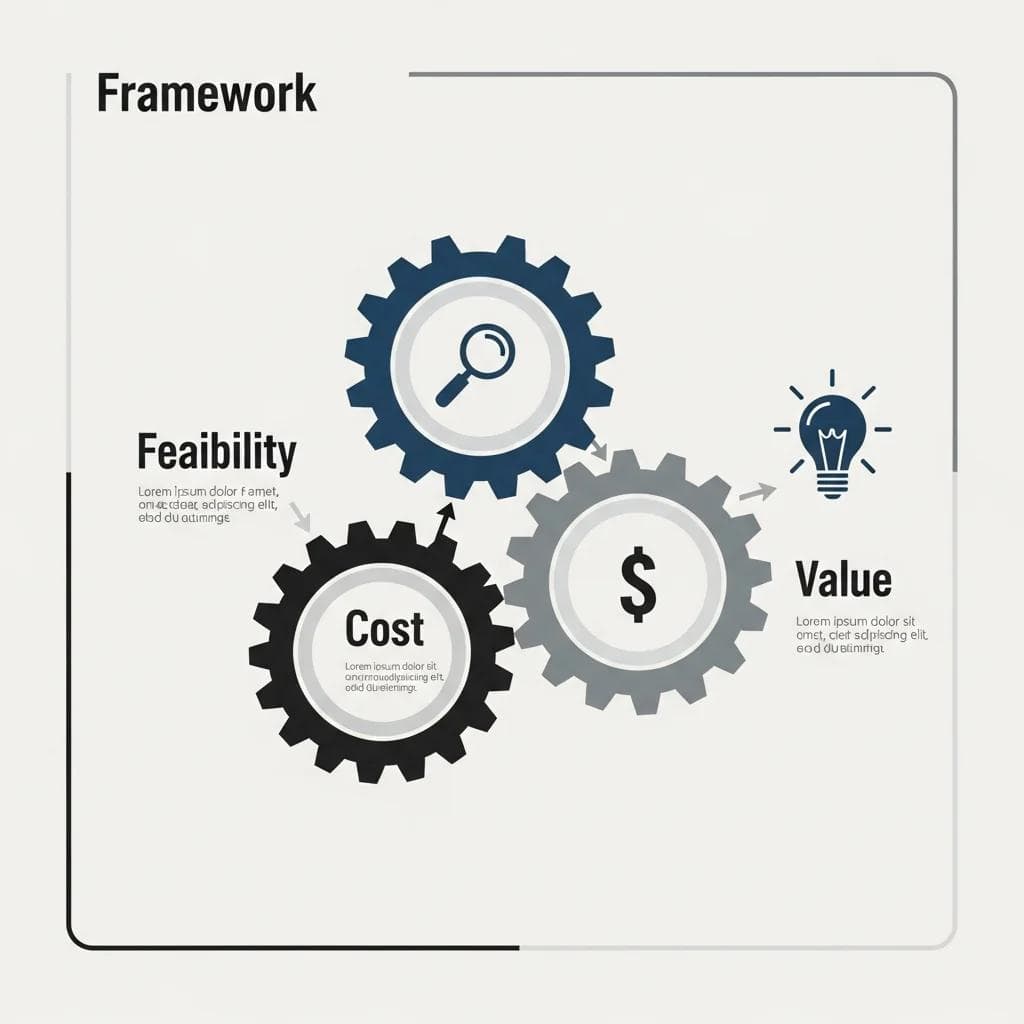

Framework: The Three-Question PoC Structure

Every successful AI proof of concept must answer three fundamental questions with measurable outcomes. This framework prevents the endless pilot trap by creating clear decision points and exit criteria.

Question 1: Feasibility - "Can This Work?"

Feasibility assessment focuses on technical viability and data readiness. Your PoC should establish specific, measurable criteria such as:

- Accuracy thresholds: Define minimum acceptable performance levels (e.g., "The AI chatbot must answer 70% of customer FAQs correctly")

- Data requirements: Quantify data volume, quality, and accessibility needs

- Technical constraints: Identify integration requirements and system dependencies

- Regulatory compliance: Assess legal and compliance implications

A feasibility assessment typically requires 2-4 weeks and should cost between $15,000 and $50,000 for most enterprise applications. This investment provides clarity on whether to proceed with more extensive testing.
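As a minimal sketch, the accuracy criterion from the list above can be turned into an automated pass/fail check. The `EvalResult` type and the function names are hypothetical, and the 70% bar simply reuses the FAQ chatbot example; your own threshold and grading method will differ.

```python
from dataclasses import dataclass

# Assumption: threshold borrowed from the FAQ chatbot example (70% correct).
MIN_ACCURACY = 0.70


@dataclass
class EvalResult:
    question: str
    correct: bool  # graded by a human reviewer or against a labeled answer key


def accuracy(results: list[EvalResult]) -> float:
    """Fraction of evaluation questions answered correctly."""
    if not results:
        raise ValueError("no evaluation results to score")
    return sum(r.correct for r in results) / len(results)


def meets_feasibility_bar(results: list[EvalResult],
                          threshold: float = MIN_ACCURACY) -> bool:
    """True when measured accuracy reaches the agreed minimum."""
    return accuracy(results) >= threshold


# Hypothetical evaluation run: 15 of 20 questions answered correctly.
sample = [EvalResult(f"faq-{i}", correct=(i % 4 != 0)) for i in range(20)]
print(f"accuracy={accuracy(sample):.2f} pass={meets_feasibility_bar(sample)}")
```

Writing the threshold down as a constant before the PoC starts makes it harder to quietly lower the bar once results come in.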

Question 2: Cost - "What Will This Really Cost?"

Cost analysis extends beyond initial development to include operational expenses, maintenance, and scaling requirements. Your PoC should generate data on:

- Infrastructure costs: Computing resources, storage, and bandwidth requirements

- Human resources: Ongoing monitoring, maintenance, and improvement needs

- Integration expenses: API costs, data pipeline development, and system modifications

- Compliance costs: Security audits, regulatory compliance, and governance requirements

Traditional budgeting methods often underestimate AI project costs by 40-60% because they fail to account for data preparation, model retraining, and ongoing optimization needs. Your PoC should stress-test these assumptions with real usage patterns.
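To see what that underestimate means for planning, the sketch below converts a naive estimate into a planning range. It assumes that "underestimated by 40-60%" means actual cost lands 40-60% above the initial estimate; that reading is an interpretation, and the function name and sample figure are hypothetical.

```python
# Assumption: "underestimated by 40-60%" is read as actual cost landing
# 40-60% above the initial estimate. Other readings give different numbers.
UNDERESTIMATE_PCT_LOW = 40
UNDERESTIMATE_PCT_HIGH = 60


def adjusted_cost_range(initial_estimate: int) -> tuple[float, float]:
    """Return (low, high) planning figures for a naive cost estimate."""
    low = initial_estimate * (100 + UNDERESTIMATE_PCT_LOW) / 100
    high = initial_estimate * (100 + UNDERESTIMATE_PCT_HIGH) / 100
    return low, high


low, high = adjusted_cost_range(100_000)  # hypothetical initial estimate
print(f"Plan for {low:,.0f} to {high:,.0f}")
```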

Question 3: Value - "Will This Deliver ROI?"

Value validation requires measuring actual business impact, not just technical performance. Establish metrics that directly correlate to business outcomes:

- Efficiency gains: Time savings, process automation, and resource optimization

- Revenue impact: Customer acquisition, retention improvements, and new revenue streams

- Risk reduction: Error prevention, compliance improvements, and decision quality

- User adoption: Actual usage rates, user satisfaction, and workflow integration

Value validation is the most critical and often overlooked component of AI PoCs. Without clear business impact measurement, even technically successful projects fail to justify continued investment.

Budget Allocation: How to Distribute Resources Effectively

Effective budget management for AI testing requires a structured approach that aligns spending with validation objectives. Based on analysis of successful AI implementations, optimal budget distribution follows a specific pattern that maximizes learning while minimizing risk.

The 40-30-20-10 Budget Rule

40% - Data and Infrastructure: The largest portion of your PoC budget should address data preparation, quality assessment, and basic infrastructure setup. This includes data cleaning, labeling, storage, and initial model training environments.

30% - Development and Testing: Allocate this portion to actual model development, testing protocols, and initial integration work. This covers developer time, testing tools, and basic user interface development.

20% - Evaluation and Analysis: Reserve this budget for comprehensive evaluation, including user testing, performance analysis, and business impact assessment. This often-underestimated component determines PoC success.

10% - Contingency and Learning: Maintain a buffer for unexpected challenges, additional data requirements, or extended testing periods. AI projects frequently encounter unforeseen complications.
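The split is simple arithmetic, sketched here for a hypothetical PoC budget. The category keys and the `allocate_budget` helper are illustrative names, not part of any tool.

```python
# Shares from the 40-30-20-10 rule, in percent.
BUDGET_SHARES = {
    "data_and_infrastructure": 40,
    "development_and_testing": 30,
    "evaluation_and_analysis": 20,
    "contingency_and_learning": 10,
}


def allocate_budget(total: int) -> dict[str, int]:
    """Split a PoC budget (whole currency units) across the four categories."""
    if total < 0:
        raise ValueError("budget must be non-negative")
    allocation = {name: total * pct // 100 for name, pct in BUDGET_SHARES.items()}
    # Integer division can leave a small remainder; add it to contingency
    # so the parts always sum to the total.
    allocation["contingency_and_learning"] += total - sum(allocation.values())
    return allocation


for category, amount in allocate_budget(50_000).items():  # hypothetical budget
    print(f"{category:26s} {amount:>8,}")
```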

Cost Control Strategies

Time-boxing prevents scope creep, which affects 73% of AI pilot projects. Establish firm deadlines for each PoC phase and resist the temptation to extend timelines for marginal improvements. If your PoC cannot demonstrate value within the allocated timeframe, it's unlikely to succeed at scale.

Vendor evaluation should occur early in the process. Rather than building everything from scratch, assess whether existing AI solutions can meet your needs. This build-versus-buy analysis can save 50-70% of development costs while reducing implementation risk.

Cloud-based infrastructure provides cost flexibility for PoCs. Use scalable cloud services that allow you to pay only for actual usage during testing phases. This approach typically reduces infrastructure costs by 40-60% compared to on-premises solutions.

Red Flags: When to Stop and Pivot

Recognizing failure signals early prevents budget waste and allows for strategic pivots. Successful organizations establish clear exit criteria and aren't afraid to terminate unsuccessful pilots quickly.

Technical Red Flags

Poor data quality that requires extensive preprocessing often indicates fundamental challenges. If your PoC reveals that 60% or more of available data requires significant cleaning or augmentation, consider whether the business case justifies this investment.

Accuracy plateaus below acceptable thresholds signal potential model limitations. If performance improvements stall despite additional training data or model adjustments, you may have reached the practical limits of current AI capabilities for your use case.

Integration complexity that exceeds initial estimates suggests architectural challenges. When integration requirements consume more than 40% of your development budget, reassess whether the AI solution provides sufficient value over simpler alternatives.
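The three technical signals above can be checked mechanically at each review. This is a sketch under stated assumptions: the 60% and 40% thresholds come from the text, while the plateau definition (less than one percentage point of movement over the last three iterations) and all names are hypothetical choices you should adapt.

```python
from dataclasses import dataclass


@dataclass
class PocSnapshot:
    dirty_data_fraction: float     # share of data needing significant cleaning
    accuracy_history: list[float]  # accuracy after each training iteration
    accuracy_target: float         # minimum acceptable accuracy
    integration_spend: float       # spend on integration so far
    development_budget: float      # total development budget


def technical_red_flags(s: PocSnapshot,
                        plateau_window: int = 3,
                        plateau_delta: float = 0.01) -> list[str]:
    """Return the names of the technical red flags that are currently raised."""
    flags = []
    if s.dirty_data_fraction >= 0.60:
        flags.append("data_quality")
    # Assumed plateau rule: accuracy moved less than plateau_delta over the
    # last plateau_window iterations while still below target.
    recent = s.accuracy_history[-plateau_window:]
    stalled = (len(recent) == plateau_window
               and max(recent) - min(recent) < plateau_delta)
    if stalled and recent[-1] < s.accuracy_target:
        flags.append("accuracy_plateau")
    if (s.development_budget > 0
            and s.integration_spend / s.development_budget > 0.40):
        flags.append("integration_cost")
    return flags


snapshot = PocSnapshot(0.65, [0.55, 0.62, 0.64, 0.645, 0.648], 0.70,
                       45_000, 100_000)  # hypothetical PoC state
print(technical_red_flags(snapshot))
```

A raised flag is a prompt for a stop-or-pivot conversation, not an automatic verdict.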

Business Red Flags

Lack of user adoption during testing phases indicates market fit problems. If target users don't engage with the AI solution during controlled testing, broader adoption is unlikely. This affects 45% of AI implementations that fail to consider user experience adequately.

Unclear or shifting success metrics suggest insufficient business case development. If stakeholders cannot agree on what constitutes success, the PoC lacks the foundation necessary for informed decision-making.

Regulatory or compliance concerns that emerge during testing can derail entire projects. Early identification of these issues allows for strategic pivots before significant resource commitment.

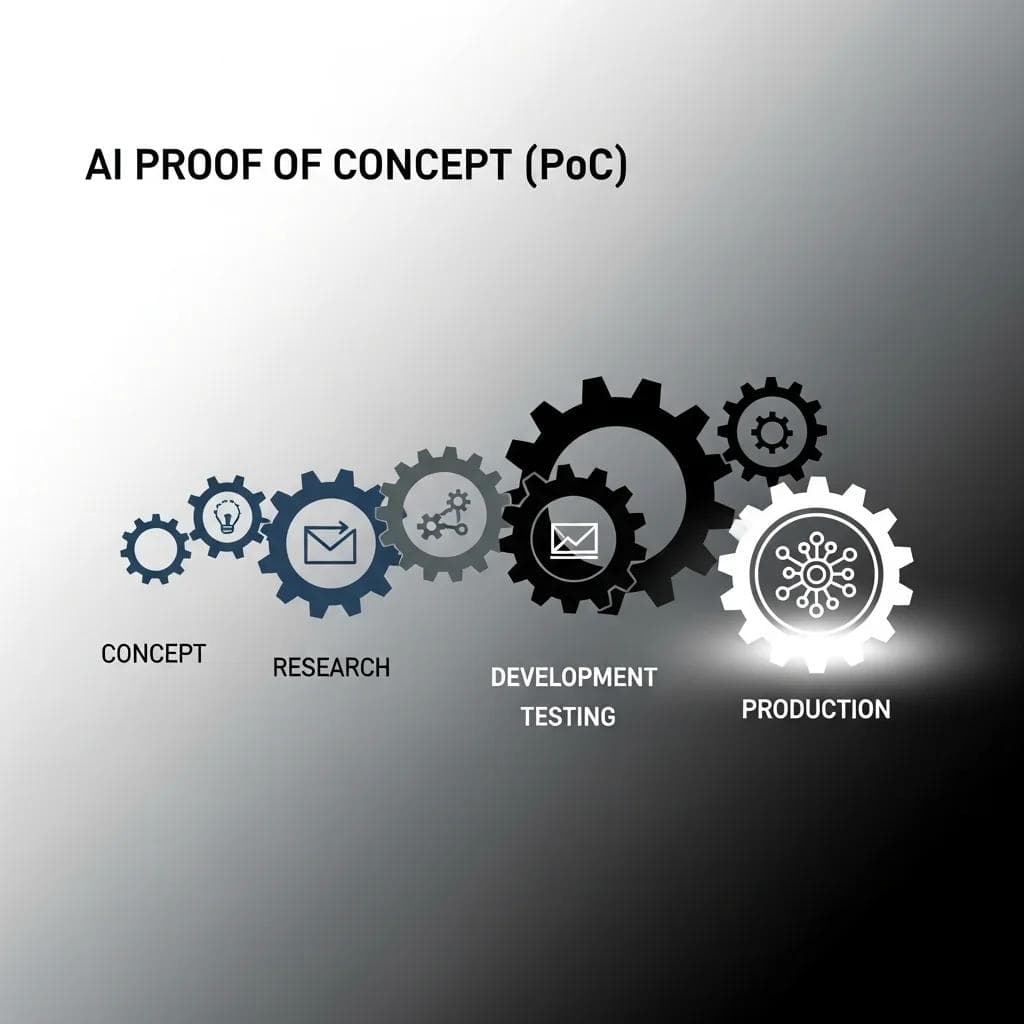

The Production Transition: From PoC to Scalable Solution

Successfully transitioning from proof of concept to production requires deliberate planning and architectural considerations that extend far beyond the initial PoC scope. This transition represents the most critical phase where many AI projects fail due to inadequate preparation.

Infrastructure Scaling Requirements

Production AI systems require 3-5x the infrastructure capacity of PoC environments to handle real-world usage patterns. Your transition plan must account for:

- Load balancing and redundancy: Multiple model instances to ensure availability and performance

- Data pipeline robustness: Automated data processing, validation, and quality monitoring

- Security hardening: Production-grade authentication, encryption, and access controls

- Monitoring and alerting: Real-time performance tracking and automated failure detection

Governance and Compliance Framework

Production AI systems require comprehensive governance structures that extend beyond technical implementation. Establish clear protocols for:

- Model versioning and rollback procedures: Ability to quickly revert to previous versions if issues arise

- Performance monitoring and retraining schedules: Regular assessment of model drift and accuracy degradation

- Audit trails and explainability: Documentation of decision-making processes for regulatory compliance

- Stakeholder communication: Regular reporting on system performance and business impact

Change Management and User Adoption

Successful production deployment requires extensive change management that begins during the PoC phase. Users need training, support systems, and clear value propositions to drive adoption.

Establish feedback loops that capture user experience data and system performance metrics. This information drives continuous improvement and helps identify optimization opportunities that weren't apparent during initial testing.

Measuring Success: KPIs That Matter

Effective measurement requires both technical and business metrics that provide a comprehensive view of AI system performance and value delivery. Many organizations focus exclusively on technical metrics while ignoring business impact indicators.

Technical Performance Metrics

Model accuracy and precision provide baseline performance indicators, but they must be contextualized within business requirements. A 95% accurate model that fails on edge cases affecting high-value customers may deliver less business value than an 85% accurate model with consistent performance.

Response time and throughput become critical in production environments where user experience depends on system responsiveness. Establish performance benchmarks during PoC testing that reflect real-world usage patterns.

System reliability and uptime directly impact user adoption and business continuity. Production AI systems should maintain 99.5% or higher availability to support critical business processes.
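An availability target is easier to reason about as a downtime allowance. The short sketch below converts the 99.5% figure into hours; the function name and the choice of a 30-day month and 365-day year are assumptions for illustration.

```python
def allowed_downtime_hours(availability_pct: float, period_hours: float) -> float:
    """Hours of downtime permitted in a period at a given availability target."""
    if not 0 <= availability_pct <= 100:
        raise ValueError("availability must be a percentage between 0 and 100")
    return period_hours * (100 - availability_pct) / 100


HOURS_PER_30_DAYS = 30 * 24   # 720
HOURS_PER_YEAR = 365 * 24     # 8760

print(f"per 30 days: {allowed_downtime_hours(99.5, HOURS_PER_30_DAYS):.1f} h")
print(f"per year:    {allowed_downtime_hours(99.5, HOURS_PER_YEAR):.1f} h")
```

At 99.5%, that works out to about 3.6 hours of downtime per 30 days, or roughly 43.8 hours per year.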

Business Impact Indicators

User adoption rates and engagement metrics provide direct feedback on solution value. Track active users, session duration, and feature utilization to understand how AI capabilities integrate into existing workflows.

Process efficiency improvements quantify operational benefits through metrics like task completion time, error reduction rates, and resource utilization optimization.

Revenue and cost impact measures provide the ultimate validation of AI investment value. Track direct revenue attribution, cost savings, and productivity improvements that result from AI implementation.

How to Test AI Without Wasting Budget

Testing AI efficiently requires a strategic approach that balances innovation with cost control. Here are best practices to ensure your AI testing is both effective and economical:

- Define clear objectives and success metrics before starting any PoC. This prevents unnecessary spending on undefined goals.

- Utilize existing tools and platforms where possible to reduce development costs and accelerate validation.

- Implement time-boxing to keep projects on track and within budget.

- Conduct regular reviews to assess progress and make informed decisions about whether to continue, pivot, or stop.

Frequently Asked Questions

How long should an AI proof of concept take?

An effective AI proof of concept should run 2-4 weeks for exploratory validation and 6-12 weeks for production-ready testing. Shorter timeframes often provide insufficient data for informed decisions, while longer periods typically indicate scope creep or inadequate planning. Time-boxing prevents endless iteration and forces clear decision-making.

What percentage of my AI budget should go to proof of concept?

Plan for roughly 35-50% of your total AI project budget across both stages of validation: 10-15% for exploratory prototyping and 25-35% for production-ready validation. Organizations that spend less often make uninformed scaling decisions, while those spending more may over-engineer solutions before validating business value.

How do I know if my AI PoC is successful enough to move to production?

Success criteria should be established before beginning your PoC and include technical performance thresholds, user adoption rates, and business impact metrics. Generally, successful PoCs demonstrate 70%+ accuracy on defined tasks, positive user feedback from 80%+ of test participants, and a clear path to ROI within 12-18 months of production deployment.
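Those three criteria can be written down as an explicit gate before the PoC starts. The sketch below uses the general thresholds from this answer as defaults; the `PocOutcome` type and function name are hypothetical, and the 18-month ceiling is the upper end of the 12-18 month range.

```python
from dataclasses import dataclass


@dataclass
class PocOutcome:
    accuracy: float                  # measured on the defined tasks, 0-1
    positive_feedback_share: float   # share of test users with positive feedback
    projected_payback_months: float  # months until cumulative ROI turns positive


def ready_for_production(outcome: PocOutcome,
                         min_accuracy: float = 0.70,
                         min_positive_feedback: float = 0.80,
                         max_payback_months: float = 18) -> tuple[bool, list[str]]:
    """Return (go, missed_criteria) for a finished PoC."""
    missed = []
    if outcome.accuracy < min_accuracy:
        missed.append("accuracy")
    if outcome.positive_feedback_share < min_positive_feedback:
        missed.append("user_feedback")
    if outcome.projected_payback_months > max_payback_months:
        missed.append("roi_timeline")
    return (not missed, missed)


go, missed = ready_for_production(PocOutcome(0.74, 0.72, 14))  # hypothetical result
print("go" if go else f"no-go, missed: {missed}")
```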

What's the biggest mistake companies make with AI proofs of concept?

The most common mistake is treating PoCs as extended research projects rather than decision-making tools. Companies often continue testing indefinitely without clear success criteria or exit conditions. Successful PoCs have specific timelines, measurable objectives, and predetermined decision points that prevent endless iteration.

Should I build custom AI or buy existing solutions for my PoC?

Evaluate existing solutions first, as they can reduce PoC costs by 50-70% while providing faster validation. Build custom solutions only when existing options cannot meet specific requirements or when competitive advantage depends on proprietary capabilities. Most successful AI implementations combine purchased solutions with custom integration and optimization.

How do I prevent scope creep during AI testing?

Establish firm boundaries around PoC objectives, timeline, and success metrics before beginning development. Create a formal change control process that requires stakeholder approval for any modifications to scope or timeline. Time-boxing is essential—if you cannot demonstrate value within allocated timeframes, the solution likely won't succeed at scale.

What data quality standards should I establish for AI PoCs?

Data should be representative of production conditions, with at least 80% completeness and accuracy for critical fields. Establish data validation protocols, cleansing procedures, and quality monitoring systems during PoC phases. Poor data quality is the leading cause of AI project failure, affecting over 60% of implementations.
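Completeness is the easier half of that standard to automate. The following sketch measures it per critical field against the 80% bar; the record layout and function names are hypothetical, and checking accuracy would additionally need a trusted reference to compare against.

```python
def field_completeness(records: list[dict],
                       critical_fields: list[str]) -> dict[str, float]:
    """Share of records with a non-empty value for each critical field."""
    if not records:
        raise ValueError("no records to assess")
    result = {}
    for field in critical_fields:
        filled = sum(1 for r in records if r.get(field) not in (None, ""))
        result[field] = filled / len(records)
    return result


def fields_below_threshold(records: list[dict],
                           critical_fields: list[str],
                           threshold: float = 0.80) -> list[str]:
    """Critical fields whose completeness falls short of the threshold."""
    shares = field_completeness(records, critical_fields)
    return [field for field, share in shares.items() if share < threshold]


# Hypothetical sample: every record has an id, but two lack an email.
records = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 2, "email": ""},
    {"customer_id": 3, "email": "c@example.com"},
    {"customer_id": 4, "email": None},
    {"customer_id": 5, "email": "e@example.com"},
]
print(fields_below_threshold(records, ["customer_id", "email"]))
```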

How do I measure ROI for AI proofs of concept?

Focus on leading indicators during PoCs: user engagement, task completion rates, accuracy improvements, and time savings. Establish baseline measurements before AI implementation and track improvements throughout testing. Successful PoCs should demonstrate clear paths to positive ROI within 12-18 months of production deployment.
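One simple way to test a 12-18 month ROI claim is a payback-period estimate built from PoC measurements. This is a deliberately simplified sketch: it assumes a flat monthly benefit and run cost with no discounting, and the function name and figures are hypothetical.

```python
import math


def payback_months(upfront_cost: float,
                   monthly_benefit: float,
                   monthly_run_cost: float) -> float:
    """Months until cumulative net benefit covers the upfront investment.

    Assumes benefit and run cost stay flat from month to month.
    """
    net_monthly = monthly_benefit - monthly_run_cost
    if net_monthly <= 0:
        return math.inf  # the investment never pays back at these rates
    return upfront_cost / net_monthly


# Hypothetical figures: baseline savings measured during the PoC,
# run cost taken from the PoC's operational cost data.
months = payback_months(upfront_cost=240_000,
                        monthly_benefit=30_000,
                        monthly_run_cost=10_000)
print(f"payback in {months:.1f} months, within 18: {months <= 18}")
```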

What skills does my team need for effective AI testing?

Successful AI PoCs require multidisciplinary teams including data scientists, software engineers, domain experts, and business analysts. External expertise may be necessary for specialized areas like model optimization, security, or regulatory compliance. Consider partnering with experienced AI consulting firms to accelerate learning and reduce implementation risk.

How do I handle stakeholder expectations during AI pilots?

Set realistic expectations by clearly communicating PoC objectives, timelines, and success criteria. Regular progress updates and transparent reporting prevent misaligned expectations. Emphasize that PoCs are designed to inform decisions, not deliver production-ready solutions. Manage expectations around accuracy, capabilities, and implementation timelines from the beginning.

Conclusion: Validation Is a Stage, Not a Destination

The goal of a proof of concept is to decide—not to linger. Successful organizations treat PoCs as decision-making tools with clear timelines, measurable objectives, and predetermined exit criteria. They resist the temptation to perfect solutions during testing phases and focus instead on gathering sufficient evidence for informed investment decisions.

Effective AI testing requires structured approaches that balance technical validation with business impact assessment. By following the three-question framework—feasibility, cost, and value—and maintaining disciplined budget allocation, organizations can minimize waste while maximizing learning.

Remember that validation is a stage in your AI journey, not the destination. The insights gained from well-structured proofs of concept provide the foundation for successful production deployments that deliver measurable business value. Whether you decide to proceed, pivot, or pause, a properly executed PoC ensures that decision is based on evidence rather than assumptions.

The difference between AI success and failure often comes down to how effectively you structure your initial validation efforts. Invest the time to plan comprehensive PoCs, establish clear success criteria, and maintain disciplined execution. Your budget—and your business outcomes—depend on getting this critical stage right.

For expert guidance on structuring your AI PoCs for success, contact Jaxxon Technology Services today.
